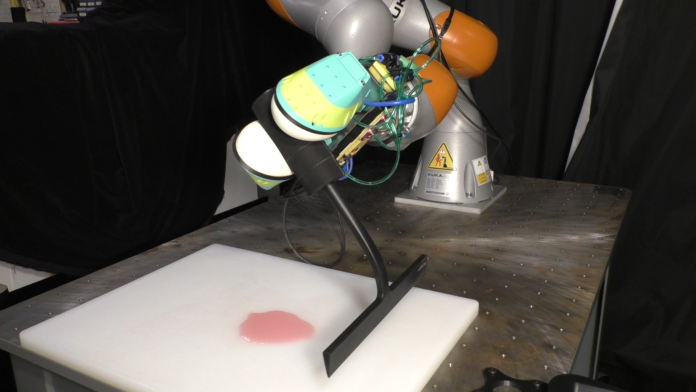

MIT CSAIL researchers have introduced a soft robotic system designed to handle grocery items with care. The setup combines computer vision, motor-based proprioception, soft tactile sensors, and a new algorithm to manage a continuous flow of diverse items along a conveyor belt, all without damaging delicate products.

How It Works

The system utilizes an RGB-D camera that captures both color and depth information, allowing the robot to determine the size, shape, and position of items moving on the belt. Integrated soft sensors in the gripper measure pressure and deformation, providing crucial data about each object’s fragility. These inputs feed into an algorithm that calculates a “delicacy score” for every item.

For example, if a bunch of grapes and a can of soup arrive on the belt, the camera first identifies and locates both items. When the gripper picks up the grapes, the tactile sensors detect how easily they deform and the system assigns a high delicacy score, sending the grapes to a buffer to be packed later. The soup, which shows minimal deformation, receives a low delicacy score and is packed immediately. Once all the sturdier items are stowed, the robot retrieves the buffered delicate items and places them on top so that nothing heavy rests on them.
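The buffering strategy in this example can be sketched in a few lines of Python. This is only an illustration, not the researchers' implementation: the `Item` class, the 0-to-1 score range, the `DELICACY_THRESHOLD` value, and the scores given to each item are all assumptions made for the sketch, since the article does not say how scores are computed or what cutoff is used.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the article does not state how a score maps to a decision.
DELICACY_THRESHOLD = 0.5


@dataclass
class Item:
    name: str
    delicacy: float  # assumed range [0, 1]; higher means more fragile


def pack_order(belt, threshold=DELICACY_THRESHOLD):
    """Return items in the order they enter the bag (bottom first)."""
    packed = []  # sturdy items, packed as soon as they arrive
    buffer = []  # delicate items, set aside until the belt is empty
    for item in belt:
        if item.delicacy >= threshold:
            buffer.append(item)
        else:
            packed.append(item)
    # Buffered delicate items go in last, so they end up on top.
    return packed + buffer


# Illustrative scores only; these are not measurements from the study.
belt = [Item("grapes", 0.9), Item("soup can", 0.1),
        Item("bread", 0.8), Item("coffee", 0.2)]
order = [item.name for item in pack_order(belt)]
print(order)  # ['soup can', 'coffee', 'grapes', 'bread']
```

A single threshold is the simplest possible rule. A real system would more likely decide from the tactile and visual readings directly and would also have to respect a finite buffer size.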

Testing and Performance

Researchers tested the system on ten different, previously unseen grocery items, introduced onto the conveyor belt in random order. The experiment was repeated multiple times, and performance was measured by counting how often a heavy item was placed on top of a delicate one. The results were promising:

- The new system produced nine times fewer damaging actions than a sensorless baseline that relied solely on pre-programmed motions.

- It also produced 4.5 times fewer damaging actions than a vision-only method that lacked tactile feedback.

Items tested included delicate ones like bread, grapes, muffins, and clementines, as well as sturdier items like soup cans, coffee, cheese blocks, and ice cream containers.
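The damage metric described above, counting heavy items placed on top of delicate ones, could be computed roughly as follows. This is a simplified sketch under stated assumptions: the function name `count_damaging_actions` is hypothetical, each item is reduced to a delicate/sturdy flag, and any sturdy item packed after a delicate one counts as one damaging action. The two sequences are toy inputs, not data from the experiments.

```python
def count_damaging_actions(sequence):
    """Count sturdy items packed after a delicate item is already in the bag.

    `sequence` is a list of (name, is_delicate) pairs in packing order.
    """
    delicate_in_bag = False
    damaging = 0
    for _name, is_delicate in sequence:
        if is_delicate:
            delicate_in_bag = True
        elif delicate_in_bag:
            # A sturdy item lands on top of at least one delicate item.
            damaging += 1
    return damaging


# Toy inputs for illustration only.
arrival_order = [("grapes", True), ("soup can", False),
                 ("bread", True), ("coffee", False)]
buffered_order = [("soup can", False), ("coffee", False),
                  ("grapes", True), ("bread", True)]

naive = count_damaging_actions(arrival_order)      # packs in arrival order
buffered = count_damaging_actions(buffered_order)  # delicate items packed last
print(naive, buffered)  # 2 0
```

Packing in arrival order puts the soup can and the coffee on top of the grapes, giving two damaging actions in this toy case, while packing delicate items last gives none.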

Future Possibilities

While the current method for determining an item’s delicacy is somewhat basic, the researchers see room for improvement through enhanced sensing and more sophisticated grasping techniques. This initial success could pave the way for broader applications in retail, online order fulfillment, moving, or even recycling, all areas where handling a wide range of object types is essential.

Experts in robotics have praised this work, noting that the combination of vision and tactile sensing mimics human handling and sets a new standard in the field. Although the system is still in the research phase and not yet ready for commercial deployment, its development marks a significant step toward automating tasks that require both gentle and precise manipulation.