Large language models like ChatGPT can hold a conversation, yet they lack a true understanding of the words they use. These systems process text as abstract data, whereas humans ground language in personal experience: we know what “hot” means partly because we remember being burned.

Researchers at the Okinawa Institute of Science and Technology asked whether an AI could learn language in a more human-like way. Their approach was to design a brain-inspired AI made of several interconnected neural networks. Although the system acquired only a small vocabulary (five nouns and eight verbs), it did more than memorize words: it learned the concepts behind them.

Learning Like a Baby

Drawing on developmental psychology, the team modeled the AI’s learning process on that of human infants. Earlier approaches, such as pairing words with images or training on video recorded from a baby’s point of view, fall short because infants learn by physically interacting with their environment: they touch, grasp, and manipulate objects. To capture this richer experience, the researchers embedded their AI in an actual robot.

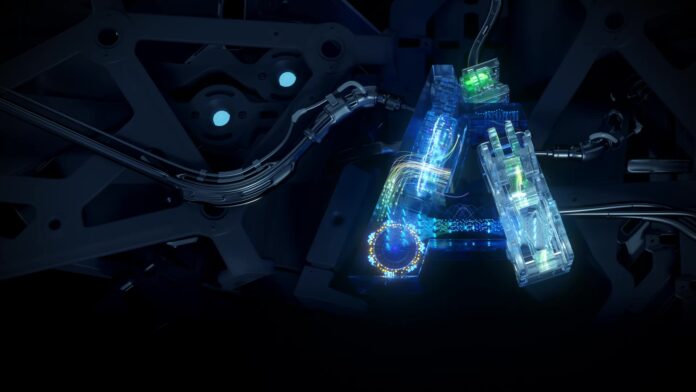

The robot itself was simple: a robotic arm with a gripper and a low-resolution RGB camera (64×64 pixels). It worked in a controlled workspace consisting of a white table and colored blocks (green, yellow, red, purple, and blue). Its task was to rearrange the blocks in response to simple commands such as “move red left” or “put red on blue.” The real challenge was building an AI that could process these commands, and the movements they call for, in a way that resembles human thought. As lead researcher Prasanna Vijayaraghavan explained, the goal was not to mimic biology exactly but to take inspiration from how the human brain works.

Integrating Perception and Action

The team’s work was rooted in the free energy principle: the idea that the brain constantly predicts incoming sensory information and works to reduce the gap between prediction and reality, either by updating its internal model or by acting on the world, in pursuit of its goals. The principle has been linked to everything from simple movements to complex plans, and it is intertwined with language as well: neuroscience research has shown that when people hear action-related phrases, the motor areas of their brains become active.
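To make “reducing the gap between prediction and reality” a little more concrete, the sketch below computes variational free energy in its generic textbook form, F = Σ_s q(s)[ln q(s) − ln p(o, s)], for one observation o and two discrete hidden states s. The state names and probabilities are hypothetical toy values, and the article does not describe the study’s actual training objective; the real model works with learned, continuous representations rather than a lookup table. What the example does show is the property that gives the principle its force: F is never smaller than the surprise −ln p(o), and it equals the surprise exactly when the belief q matches the true posterior, so lowering F amounts to finding a better explanation of what is sensed.

```python
import math

# Toy generative model with hypothetical states and numbers (illustrative only).
# PRIOR[s] = p(s): how likely each hidden state is before looking.
# LIKELIHOOD[s] = p(o | s): how likely the current observation o is under each state.
PRIOR = {"block_on_left": 0.5, "block_on_right": 0.5}
LIKELIHOOD = {"block_on_left": 0.9, "block_on_right": 0.2}


def evidence(prior, likelihood):
    """p(o) = sum over s of p(s) * p(o | s)."""
    return sum(prior[s] * likelihood[s] for s in prior)


def surprise(prior, likelihood):
    """Surprise of the observation: -ln p(o)."""
    return -math.log(evidence(prior, likelihood))


def posterior(prior, likelihood):
    """Exact Bayesian posterior p(s | o)."""
    z = evidence(prior, likelihood)
    return {s: prior[s] * likelihood[s] / z for s in prior}


def free_energy(q, prior, likelihood):
    """Variational free energy F = sum_s q(s) * (ln q(s) - ln p(o, s))."""
    total = 0.0
    for s, q_s in q.items():
        if q_s == 0.0:
            continue  # by convention 0 * ln 0 = 0
        joint = prior[s] * likelihood[s]  # p(o, s) = p(s) * p(o | s)
        total += q_s * (math.log(q_s) - math.log(joint))
    return total


if __name__ == "__main__":
    naive_belief = {"block_on_left": 0.5, "block_on_right": 0.5}
    best_belief = posterior(PRIOR, LIKELIHOOD)
    print(f"surprise        {surprise(PRIOR, LIKELIHOOD):.4f}")
    print(f"F (naive q)     {free_energy(naive_belief, PRIOR, LIKELIHOOD):.4f}")
    print(f"F (posterior q) {free_energy(best_belief, PRIOR, LIKELIHOOD):.4f}")
```

With these toy numbers the naive 50/50 belief gives F ≈ 0.857, while the exact posterior brings F down to the surprise itself, ≈ 0.598.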

To capture this in a robot, the researchers used four interlinked neural networks:

- The first processed visual input from the camera. It was complemented by visual working memory and attention modules, which let the robot focus on specific objects and separate them from the background.

- The second handled proprioception, helping the robot track its own movements and build internal models for manipulating objects.

- The third converted language commands into vector representations.

- The fourth, an associative network, integrated the outputs of the other three, loosely mirroring how people verbalize actions to themselves even when they are not speaking.
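The division of labor among the four networks can be sketched as a toy forward pass. Everything here is a hypothetical simplification: the vocabulary, the layer sizes, the bag-of-words command encoding, the random untrained weights, and the plain concatenation used for fusion are illustrative choices, not the study’s architecture, which the article describes only at the level of its four components. The sketch also handles a single snapshot and is never trained. It shows only the data flow: three modality-specific encoders each produce a latent vector, and an associative layer combines them into one shared representation.

```python
import math
import random

# Hypothetical vocabulary: words from the article's example commands plus the block colors.
VOCAB = ["move", "put", "on", "left", "red", "blue", "green", "yellow", "purple"]


def encode_command(command):
    """Language pathway, simplified: a bag-of-words count vector over VOCAB.
    Raises ValueError for words outside the toy vocabulary."""
    vec = [0.0] * len(VOCAB)
    for word in command.lower().split():
        vec[VOCAB.index(word)] += 1.0
    return vec


class Linear:
    """Dense layer y = W x + b with small random (untrained) weights."""

    def __init__(self, n_in, n_out, rng):
        self.w = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def __call__(self, x):
        if len(x) != len(self.w[0]):
            raise ValueError("input size mismatch")
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.w, self.b)]


def tanh_all(v):
    return [math.tanh(x) for x in v]


class ToyAssociativeModel:
    """Hypothetical stand-in for the four pathways described above."""

    def __init__(self, vision_dim, proprio_dim, latent_dim=8, seed=0):
        rng = random.Random(seed)
        self.vision = Linear(vision_dim, latent_dim, rng)            # 1: camera features
        self.proprio = Linear(proprio_dim, latent_dim, rng)          # 2: joint angles
        self.language = Linear(len(VOCAB), latent_dim, rng)          # 3: command vector
        self.associative = Linear(3 * latent_dim, latent_dim, rng)   # 4: fusion

    def forward(self, image_features, joint_angles, command):
        z_vision = tanh_all(self.vision(image_features))
        z_proprio = tanh_all(self.proprio(joint_angles))
        z_language = tanh_all(self.language(encode_command(command)))
        # The associative layer sees all three latent codes at once (list concatenation).
        return tanh_all(self.associative(z_vision + z_proprio + z_language))


if __name__ == "__main__":
    model = ToyAssociativeModel(vision_dim=16, proprio_dim=5)
    # Stand-ins: a real 64x64 RGB frame holds 64 * 64 * 3 = 12,288 values; here we
    # pretend a visual front end has already reduced it to 16 features.
    image_features = [0.0] * 16
    joint_angles = [0.1] * 5
    shared = model.forward(image_features, joint_angles, "put red on blue")
    print(len(shared))  # 8
```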

Developing Compositional Understanding

The system was designed to test compositionality: the ability to combine known words and actions in new ways, which is key to generalizing language. Just as children learn to combine familiar words to describe new experiences, the robot began to generalize. After learning how certain commands mapped to actions, it could carry out instructions it had never encountered during training, such as moving blocks in new combinations of color and position.
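What compositionality buys can be illustrated with a deliberately simple, hand-written interpreter. This is not how the robot works: its word meanings were learned by neural networks from experience, whereas here each meaning is a hypothetical rule coded by hand (the grid world, its coordinates, and the extra word “right” are all assumptions). The point is structural: because “move”, each color, and each direction are defined separately, a new combination such as “move purple left” is interpreted correctly without ever having been seen as a whole.

```python
# Hypothetical toy world: each block's (x, y) position on a grid; y > 0 means stacked.
def make_world():
    return {"red": (0, 0), "blue": (2, 0), "green": (4, 0), "yellow": (6, 0), "purple": (8, 0)}


# Word meanings, each defined once. "right" is an assumed counterpart to "left".
DIRECTIONS = {"left": (-1, 0), "right": (1, 0)}


def move(world, block, direction):
    dx, dy = DIRECTIONS[direction]
    x, y = world[block]
    world[block] = (x + dx, y + dy)


def put_on(world, block, target):
    x, y = world[target]
    world[block] = (x, y + 1)  # place the block one level above its target


def execute(world, command):
    """Interpret a command by composing the meanings of its words."""
    words = command.split()
    if len(words) == 3 and words[0] == "move":
        move(world, words[1], words[2])
    elif len(words) == 4 and words[0] == "put" and words[2] == "on":
        put_on(world, words[1], words[3])
    else:
        raise ValueError(f"unsupported command: {command!r}")
    return world


if __name__ == "__main__":
    # Neither exact command is listed anywhere: the meanings of the parts suffice.
    print(execute(make_world(), "move purple left")["purple"])     # (7, 0)
    print(execute(make_world(), "put yellow on green")["yellow"])  # (4, 1)
```

The contrast with the robot is the interesting part: here composition is guaranteed by construction, while the study’s question was whether a network could discover this kind of structure on its own.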

While previous efforts focused on teaching AIs to link words with visuals, this work also incorporated proprioception and action planning, grounding words in what the robot both sees and does. However, the experiment had its limitations. The robot worked in a small, controlled environment with a limited vocabulary (only color names and basic actions) and a fixed set of cube-shaped objects. In addition, the AI had to be trained on about 80 percent of all possible noun-verb combinations to generalize well, and its performance dropped as that share decreased.
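The 80 percent figure can be made concrete with a little arithmetic, under the assumption (not confirmed by the article) that the combinations are simple verb-noun pairs: 5 nouns × 8 verbs gives 40 pairs, so training on 80 percent means 32 seen pairs and 8 held out. The sketch below generates such splits at several ratios. The eight verb names are hypothetical placeholders because the article does not list them, and the purely random split is an assumption rather than necessarily the study’s protocol; the code counts pairs only and says nothing about how well a model would perform.

```python
import random
from itertools import product

NOUNS = ["red", "green", "yellow", "purple", "blue"]  # the five block colors from the article
VERBS = [f"verb_{i}" for i in range(8)]               # hypothetical placeholders for the eight verbs


def split_combinations(train_ratio, seed=0):
    """Randomly split all verb-noun pairs into seen (training) and held-out (test) sets."""
    pairs = list(product(VERBS, NOUNS))  # 8 * 5 = 40 pairs
    rng = random.Random(seed)
    rng.shuffle(pairs)
    n_train = round(train_ratio * len(pairs))
    return pairs[:n_train], pairs[n_train:]


def words_covered(train):
    """True if every verb and every noun appears in at least one training pair."""
    seen_verbs = {v for v, _ in train}
    seen_nouns = {n for _, n in train}
    return seen_verbs == set(VERBS) and seen_nouns == set(NOUNS)


if __name__ == "__main__":
    for ratio in (0.8, 0.6, 0.4, 0.2):
        train, test = split_combinations(ratio)
        print(f"{ratio:.0%} train: {len(train)} seen, {len(test)} held out, "
              f"all words covered: {words_covered(train)}")
```

A check like `words_covered` matters because a random split can, by chance, hold out every pair containing a particular word; a model tested on that word would then face new vocabulary rather than a new composition of familiar parts.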

Despite these limitations, the researchers believe the system can be scaled up with more powerful hardware than the single RTX 3090 GPU used in this study. Their next goal is to adapt the model to a humanoid robot equipped with more sophisticated sensors and manipulators, bringing AI a step closer to operating effectively in the complex real world.